Introduction:

Keeping APIs fast and reliable is essential to delivering a seamless experience to users. Azure API Management (APIM) offers several features for optimizing API performance, and one of the most important is load balancing and failover across multiple backends. In this article, we will explore how to configure load balancing and failover in Azure API Management, focusing on scenarios with three different backends, such as Azure Functions slots.

Understanding Load Balancing in Azure API Management:

Load balancing distributes incoming API requests across multiple backend endpoints to optimize resource utilization, improve scalability, and reduce the impact of downtime. Azure APIM lets you configure multiple backend endpoints for an API and define the load balancing behavior with XML policies.

Configuring Backend Endpoints:

1. Navigate to the API Management Instance:

– Access the Azure portal and locate your API Management instance.

2. Configure Backend Endpoints:

– In the API’s settings, navigate to the “Backend” tab and add three or more backend endpoints corresponding to your Azure Functions slots.

Load Balancing Policies:

1. Define Load Balancing Conditions:

– Use the `<choose>` policy to define conditions based on request parameters, headers, or any relevant criteria.

2. Set Backend Services:

– Utilize the `<set-backend-service>` policy to dynamically switch between different backend services based on the defined conditions.

Health Probes and Failover:

1. Configure Health Probes:

– Implement health probes to periodically check the health of each backend service.

2. Automated Failover:

– APIM can automatically fail over to a healthy backend when a service becomes unavailable or unhealthy.
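As a rough illustration of the failover step, the sketch below retries a failed request against a second backend. It assumes the first attempt goes to the API's default backend. The secondary URL, the retry count, and the choice to treat only 5xx responses as failures are illustrative assumptions, not settings from a tested configuration. The `context.Response != null` guard is a defensive assumption as well.

```xml
<backend>
    <!-- Sketch only: retry up to 2 times when the backend answers with a 5xx status -->
    <retry condition="@(context.Response != null && context.Response.StatusCode >= 500)" count="2" interval="1" first-fast-retry="true">
        <choose>
            <!-- A previous attempt failed: point the next attempt at the secondary backend (placeholder URL) -->
            <when condition="@(context.Response != null && context.Response.StatusCode >= 500)">
                <set-backend-service base-url="https://backend-slot2.azurewebsites.net" />
            </when>
        </choose>
        <!-- Buffer the request body so it can be sent again on a retry -->
        <forward-request buffer-request-body="true" />
    </retry>
</backend>
```

Note that a policy like this reacts to failed responses; it does not probe backend health ahead of time.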

Example XML Policy for Load Balancing:

    <inbound>
        <base />
        <choose>
            <when condition="@(context.Request.Url.Path.Contains("slot1"))">
                <set-backend-service base-url="https://backend-slot1.azurewebsites.net" />
            </when>
            <when condition="@(context.Request.Url.Path.Contains("slot2"))">
                <set-backend-service base-url="https://backend-slot2.azurewebsites.net" />
            </when>
            <otherwise>
                <set-backend-service base-url="https://backend-slot3.azurewebsites.net" />
            </otherwise>
        </choose>
    </inbound>
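The example above routes by URL path, so traffic is only as balanced as the incoming paths are. For distribution that does not depend on the path, one option is to pick a backend at random for each request. The following is a minimal sketch under that assumption: the variable name `backendIndex` is made up for illustration, and the three URLs are reused from the example above.

```xml
<inbound>
    <base />
    <!-- Pick 0, 1, or 2 per request; Next(0, 3) excludes the upper bound -->
    <set-variable name="backendIndex" value="@(new Random(context.RequestId.GetHashCode()).Next(0, 3))" />
    <choose>
        <when condition="@((int)context.Variables["backendIndex"] == 0)">
            <set-backend-service base-url="https://backend-slot1.azurewebsites.net" />
        </when>
        <when condition="@((int)context.Variables["backendIndex"] == 1)">
            <set-backend-service base-url="https://backend-slot2.azurewebsites.net" />
        </when>
        <otherwise>
            <set-backend-service base-url="https://backend-slot3.azurewebsites.net" />
        </otherwise>
    </choose>
</inbound>
```

Random selection tends to even out over many requests, but it does not guarantee strict alternation between backends.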

Validating Performance: Executing and Analyzing API Scenarios

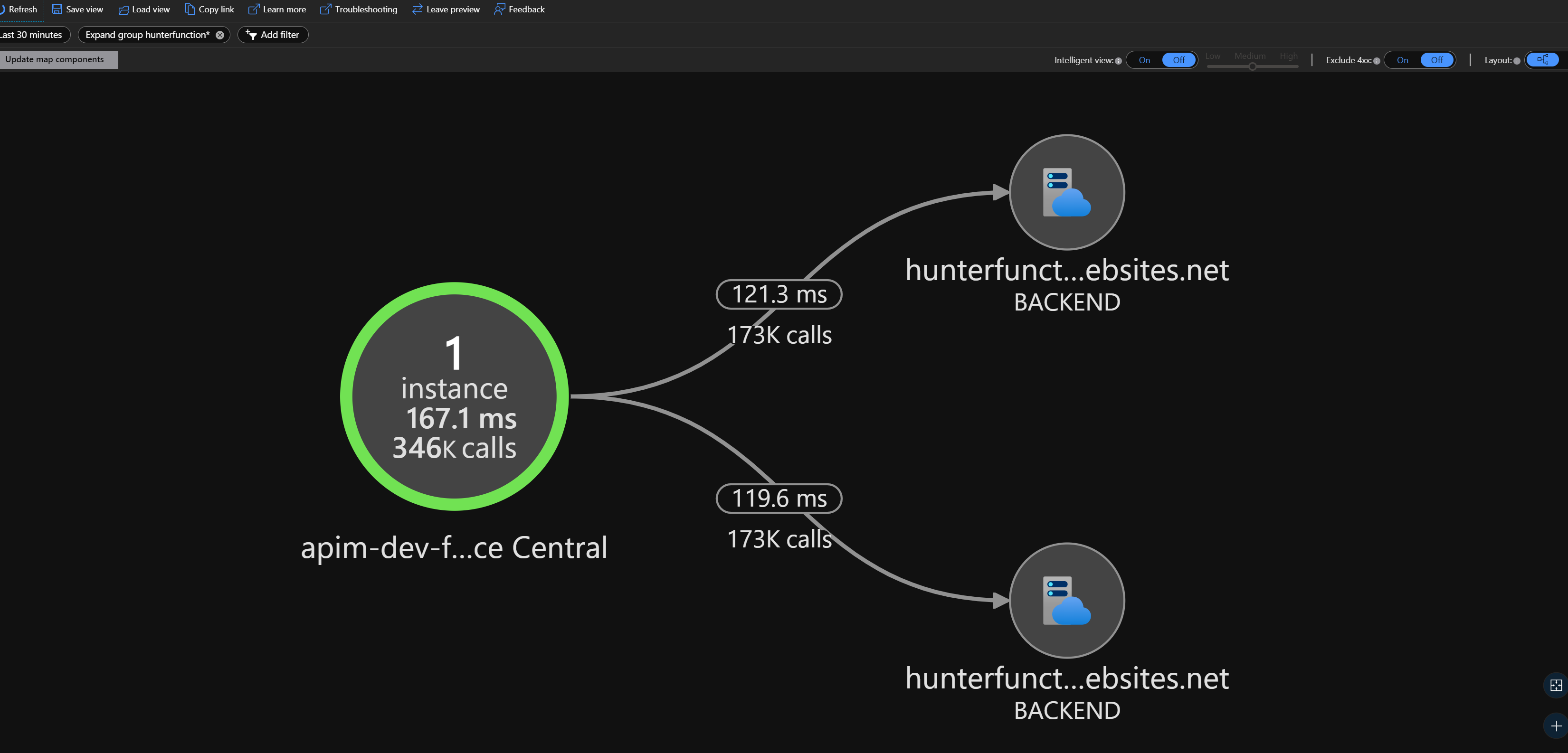

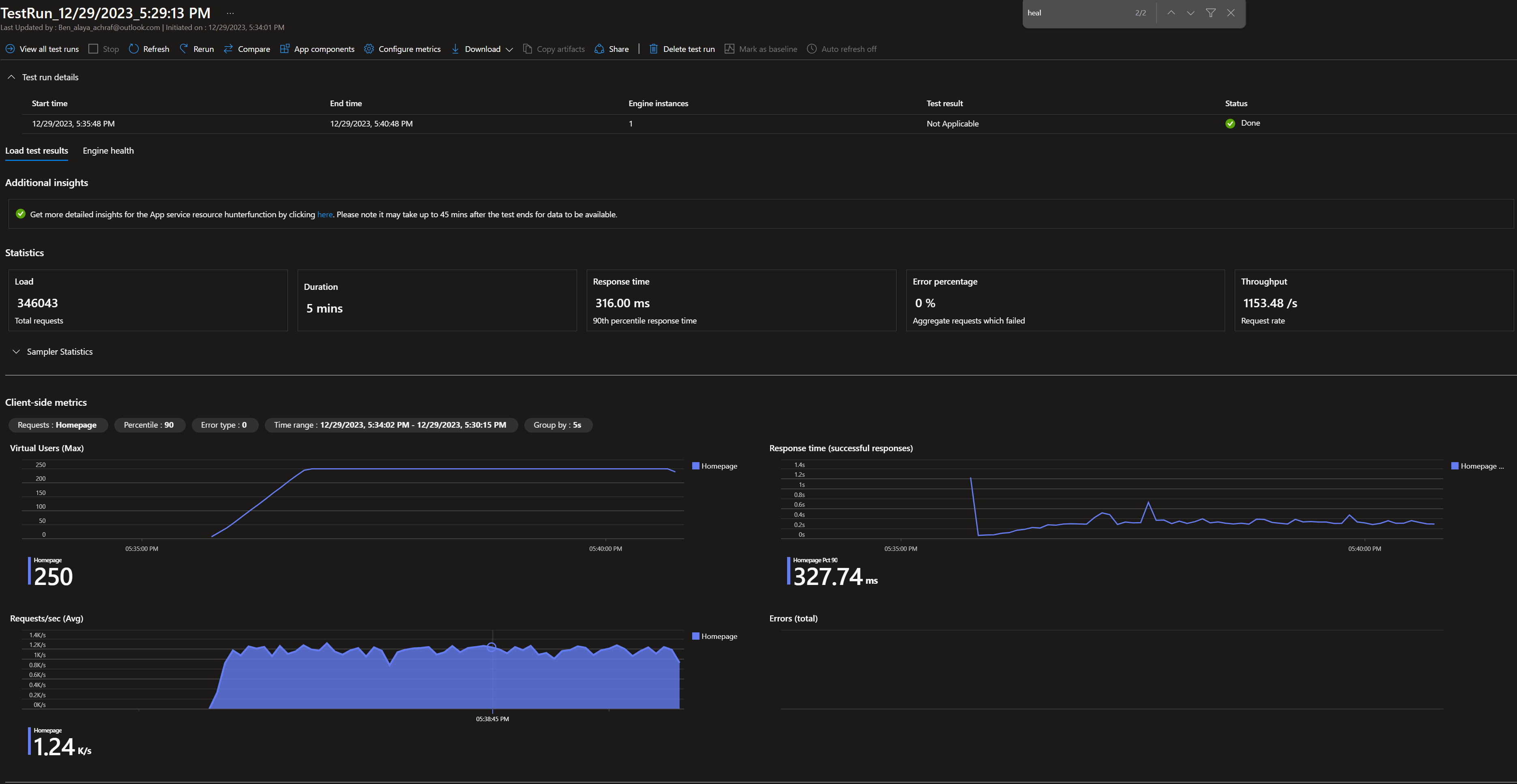

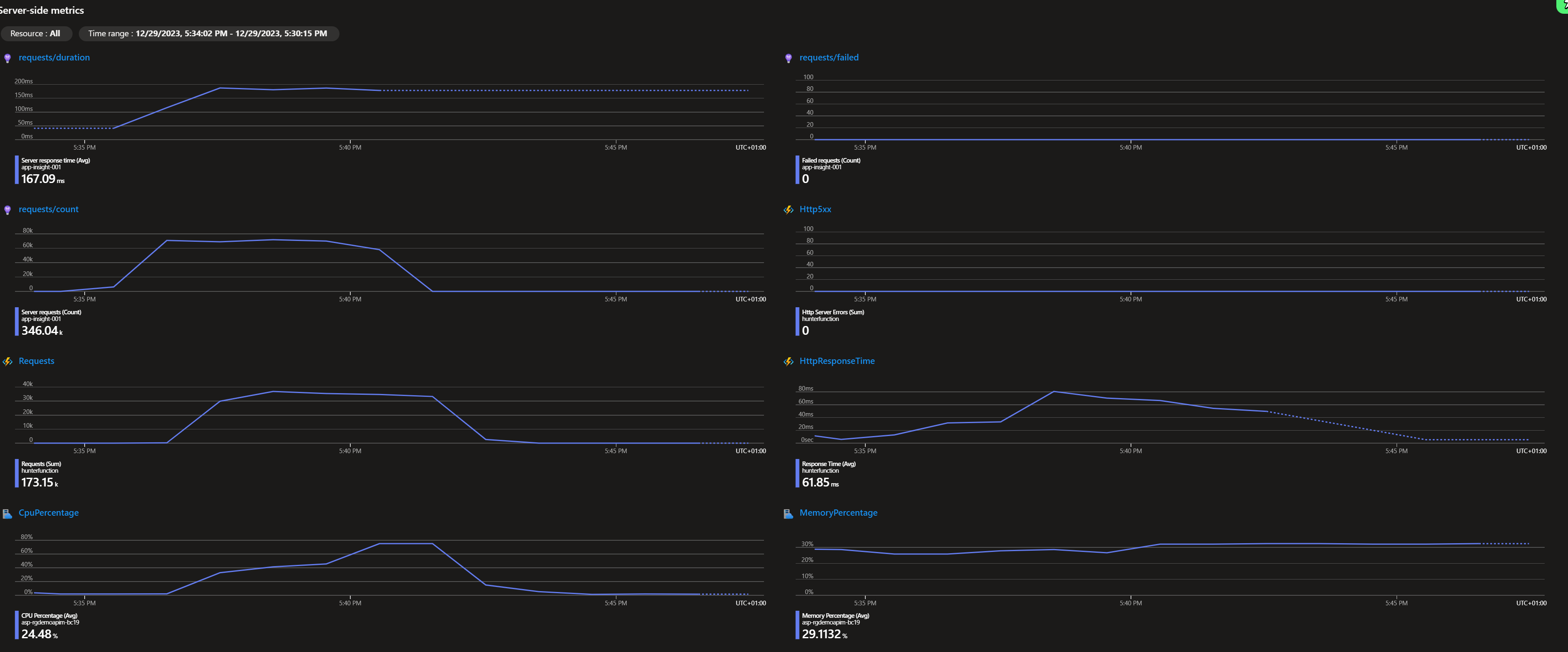

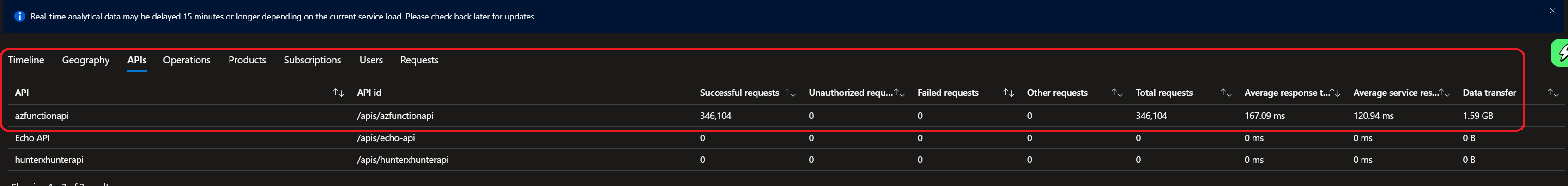

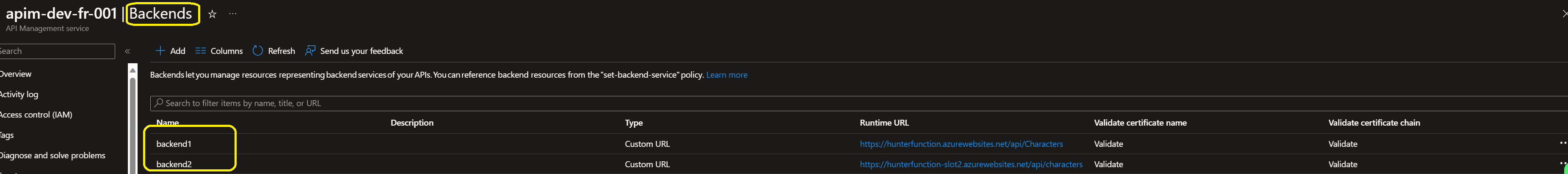

To test my specific scenario, I created an API Management instance (Developer tier) alongside an Azure Function with two slots. I then ran a load test totaling 346,043 requests using Azure Load Testing. The results are as follows:

Screenshots (shown above): Application Insights, Azure Load Testing, Azure APIM, and Azure APIM backends.

Scripts Used:

    <policies>
        <inbound>
            <base />
            <!-- Managed Identity Authentication for the first resource -->
            <authentication-managed-identity resource="" />
            <!-- Read the shared counter from the cache; the variable is left unset on a cache miss -->
            <cache-lookup-value key="backend-counter" variable-name="backend-counter" />
            <choose>
                <!-- Cache miss: initialize the counter; this request goes to the API's default backend -->
                <when condition="@(!context.Variables.ContainsKey("backend-counter"))">
                    <set-variable name="backend-counter" value="0" />
                    <cache-store-value key="backend-counter" value="0" duration="100" />
                </when>
                <otherwise>
                    <choose>
                        <!-- Counter is 0: use backend1 and flip the counter to 1 -->
                        <when condition="@(int.Parse((string)context.Variables["backend-counter"]) == 0)">
                            <set-backend-service backend-id="backend1" />
                            <set-variable name="backend-counter" value="1" />
                            <cache-store-value key="backend-counter" value="1" duration="100" />
                        </when>
                        <!-- Counter is 1: use backend2 and flip the counter back to 0 -->
                        <otherwise>
                            <set-backend-service backend-id="backend2" />
                            <set-variable name="backend-counter" value="0" />
                            <cache-store-value key="backend-counter" value="0" duration="100" />
                        </otherwise>
                    </choose>
                </otherwise>
            </choose>
        </inbound>
        <backend>
            <!-- Retry on any 4xx or 5xx response (the original ">= 500 || >= 400" check is equivalent to ">= 400") -->
            <retry condition="@(context.Response.StatusCode >= 400)" count="6" interval="10" first-fast-retry="true">
                <choose>
                    <!-- The previous attempt returned an error status: switch backend based on the counter -->
                    <when condition="@(context.Response.StatusCode >= 400)">
                        <choose>
                            <when condition="@(int.Parse((string)context.Variables["backend-counter"]) == 0)">
                                <set-backend-service backend-id="backend1" />
                                <set-variable name="backend-counter" value="1" />
                                <cache-store-value key="backend-counter" value="1" duration="100" />
                            </when>
                            <otherwise>
                                <set-backend-service backend-id="backend2" />
                                <set-variable name="backend-counter" value="0" />
                                <cache-store-value key="backend-counter" value="0" duration="100" />
                            </otherwise>
                        </choose>
                    </when>
                </choose>
                <!-- Buffer the request body so it can be sent again on a retry -->
                <forward-request buffer-request-body="true" />
            </retry>
        </backend>
        <outbound>
            <base />
        </outbound>
        <on-error>
            <base />
        </on-error>
    </policies>

Details:

Inbound Section:

1. Managed Identity Authentication:

– Uses managed identity authentication when calling our Azure Function.

2. Caching:

– Performs a cache lookup for the value associated with the key “backend-counter” and stores it in the variable named “backend-counter.”

3. Conditional Routing:

– Utilizes a conditional logic structure to determine the appropriate backend service based on the value of “backend-counter.”

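– If “backend-counter” is not present in the context variables (a cache miss), the policy initializes it to 0 and stores it in the cache for 100 seconds. No backend is set explicitly for that request, so it goes to the API's default backend.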

– Alternates between two backend services (“backend1” and “backend2”) based on the value of “backend-counter.”
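The same counter idea can be extended to the three-backend scenario described in the introduction. The sketch below is an assumption-laden variant, not the policy used in the load test: “backend3” is a hypothetical third backend ID, the `default-value` attribute replaces the explicit cache-miss branch, and the managed identity step is left out for brevity.

```xml
<inbound>
    <base />
    <!-- Read the shared counter; fall back to "0" when the cache entry is missing or expired -->
    <cache-lookup-value key="backend-counter" default-value="0" variable-name="backend-counter" />
    <choose>
        <when condition="@(int.Parse((string)context.Variables["backend-counter"]) == 0)">
            <set-backend-service backend-id="backend1" />
        </when>
        <when condition="@(int.Parse((string)context.Variables["backend-counter"]) == 1)">
            <set-backend-service backend-id="backend2" />
        </when>
        <otherwise>
            <!-- "backend3" is a hypothetical third backend, not part of the tested setup -->
            <set-backend-service backend-id="backend3" />
        </otherwise>
    </choose>
    <!-- Advance the counter for the next request, wrapping back to 0 after the third backend -->
    <cache-store-value key="backend-counter" value="@(((int.Parse((string)context.Variables["backend-counter"]) + 1) % 3).ToString())" duration="100" />
</inbound>
```

Because the lookup and the store are separate steps, concurrent requests can read the same counter value. The rotation is therefore approximate under load rather than a strict round robin.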

Backend Section:

1. Retry Logic:

– Implements a retry mechanism for requests whose response has an HTTP status code of 400 or higher, which covers both client errors (4xx) and server errors (5xx).

– Retries the request up to six times with a 10-second interval between retries. Because first-fast-retry is enabled, the first retry is attempted immediately.

– Switches between the two backend services based on the “backend-counter” value whenever the previous attempt returned an error status.
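Because the retry condition also matches 4xx responses, requests that fail for client-side reasons (for example, a 404) are retried as well. If that is not desired, the condition can be narrowed. The sketch below is one possible variant, not the configuration used in the test: it retries only on 429 and 5xx responses and uses the retry policy's `max-interval` and `delta` attributes to lengthen the wait between attempts. The specific numbers are arbitrary, and the null check on `context.Response` is a defensive assumption.

```xml
<backend>
    <!-- Retry only throttling (429) and server errors (5xx); other 4xx responses are returned as-is -->
    <retry condition="@(context.Response != null && (context.Response.StatusCode == 429 || context.Response.StatusCode >= 500))" count="3" interval="1" max-interval="10" delta="2" first-fast-retry="true">
        <!-- Buffer the request body so it can be sent again on a retry -->
        <forward-request buffer-request-body="true" />
    </retry>
</backend>
```

The backend-switching `<choose>` block from the full policy could be placed before `<forward-request>` here in the same way.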

On-Error Section:

1. Base Handling:

– Inherits base error handling functionality.

Overall Purpose:

– The policy orchestrates conditional routing between two backend services, promoting load balancing and fault tolerance.

– Employs caching to optimize performance by storing and retrieving the “backend-counter” value.

– Implements a retry mechanism to enhance resilience in the face of errors, dynamically adjusting the backend service based on the error condition.

This policy is designed to enhance the reliability, performance, and flexibility of API interactions within the Azure API Management environment.

Conclusion:

In conclusion, load balancing and failover are essential strategies for improving the performance and reliability of APIs in Azure API Management. By configuring multiple backend endpoints and leveraging XML policies, developers can distribute traffic, optimize resource utilization, and improve availability. Health probes help automate the failover process, so API consumers are less likely to notice when a backend fails. As organizations embrace cloud-native architectures, mastering these techniques becomes instrumental in delivering scalable and robust APIs.
