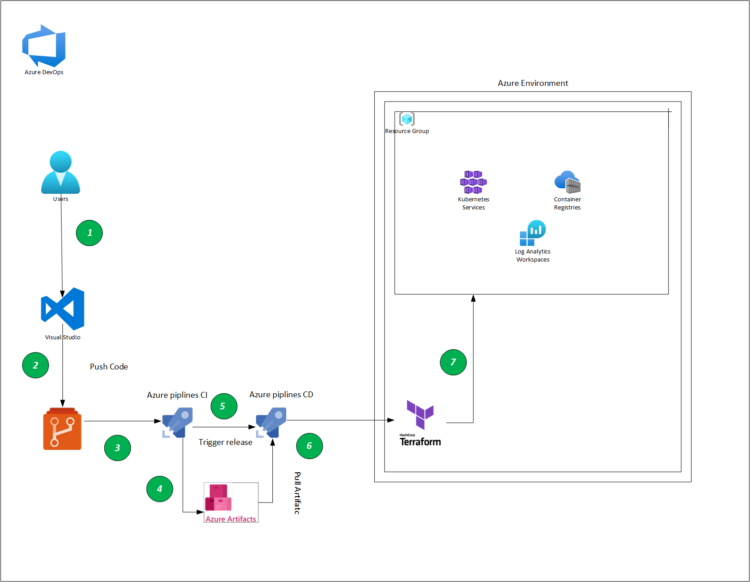

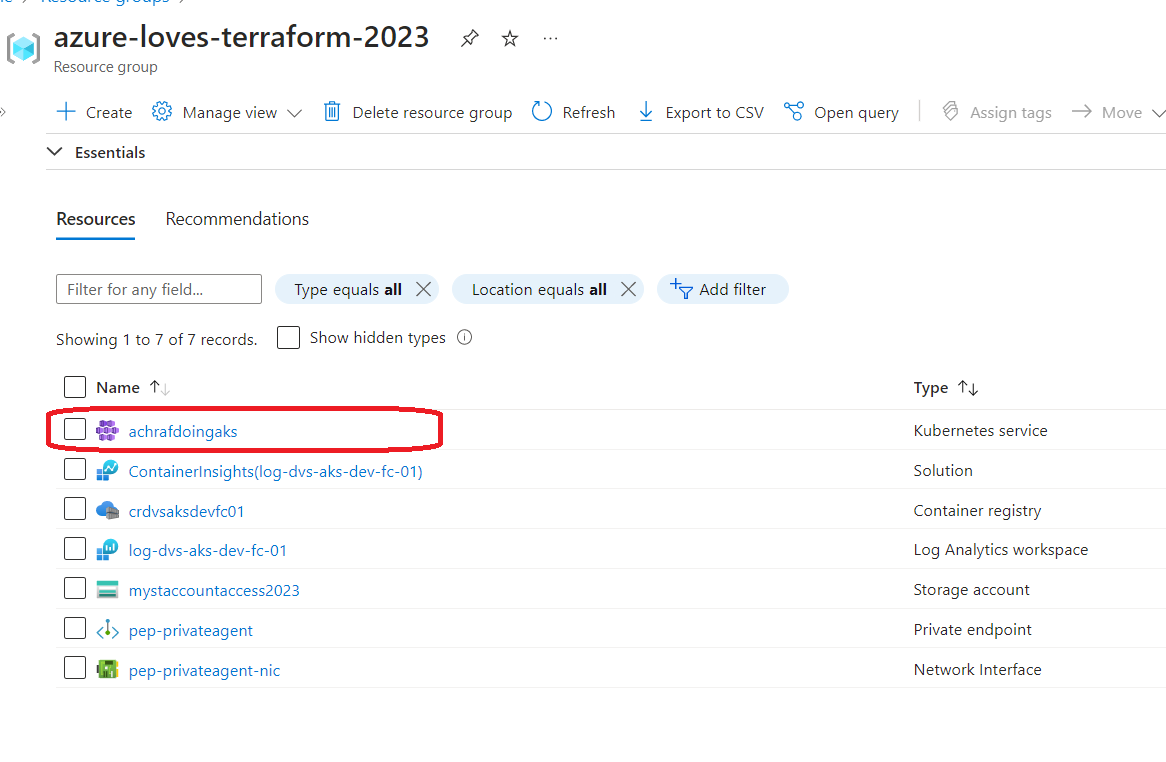

As we continue this series, we come to the part where we deploy our Azure Kubernetes Service (AKS) cluster using Terraform, with Azure DevOps handling the deployment into Microsoft Azure.

This article is a part of a series:

- Part 1 : How to setup nginx reverse proxy for aspnet core apps with and without Docker compose

- Part 2 : How to setup nginx reverse proxy && load balancer for aspnet core apps with Docker and azure kubernetes service

- Part 3 : How to configure an ingress controller using TLS/SSL for the Azure Kubernetes Service (AKS)

- Part 4 : switch to Azure Container Registry from Docker Hub

- Part 5-A: Using Azure DevOps, Automate Your CI/CD Pipeline and Your Deployments

- Part 6 : Using Github, Automate Your CI/CD Pipeline and Your Deployments

- Part 7 : Possible methods to reduce your costs

1-Creating our CI Pipeline

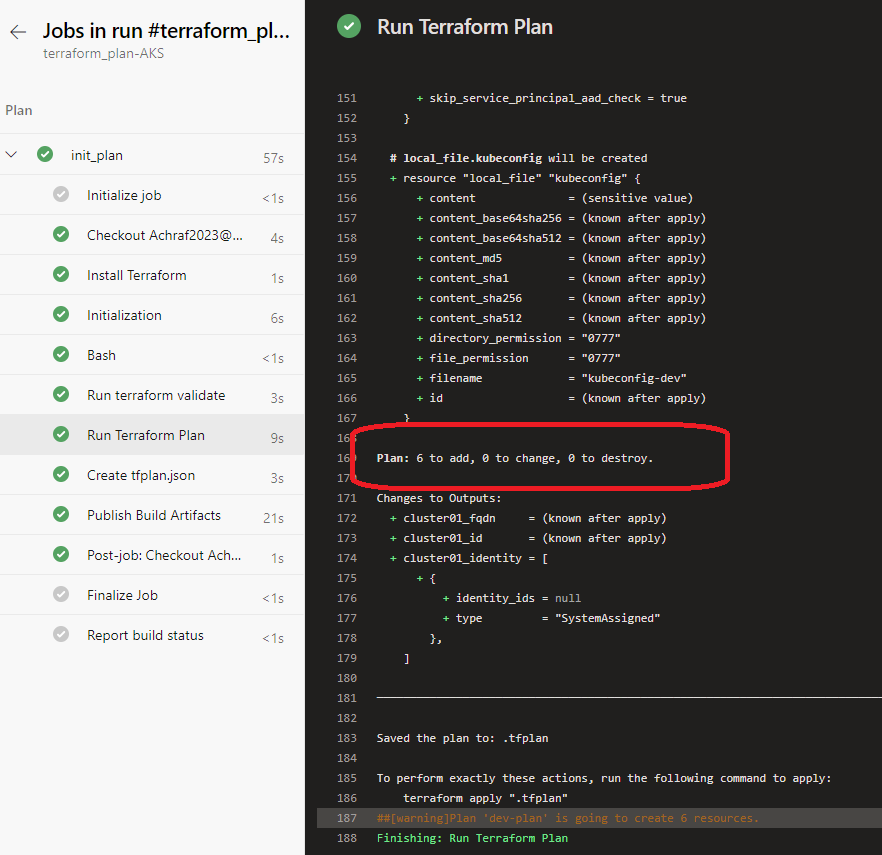

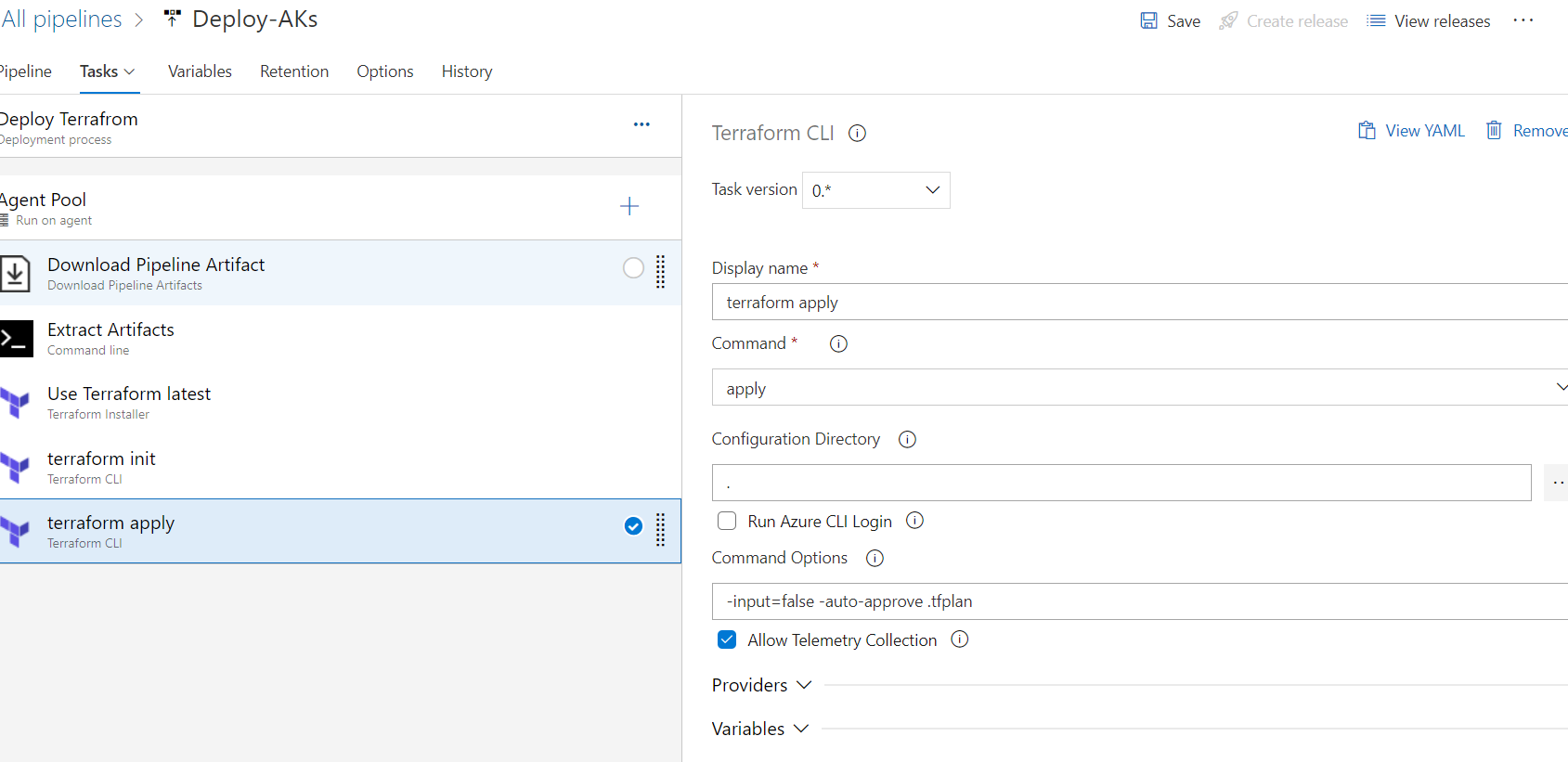

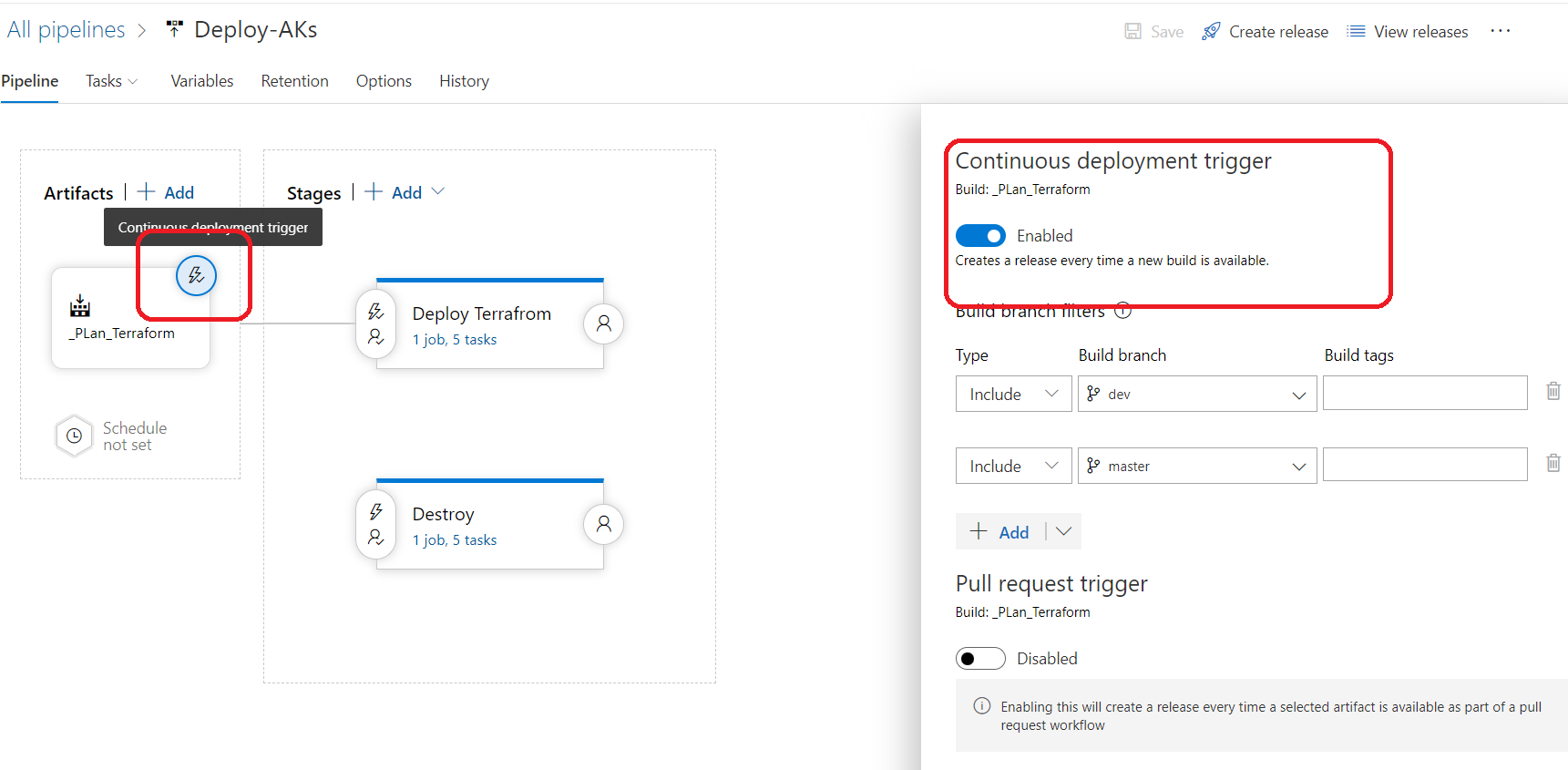

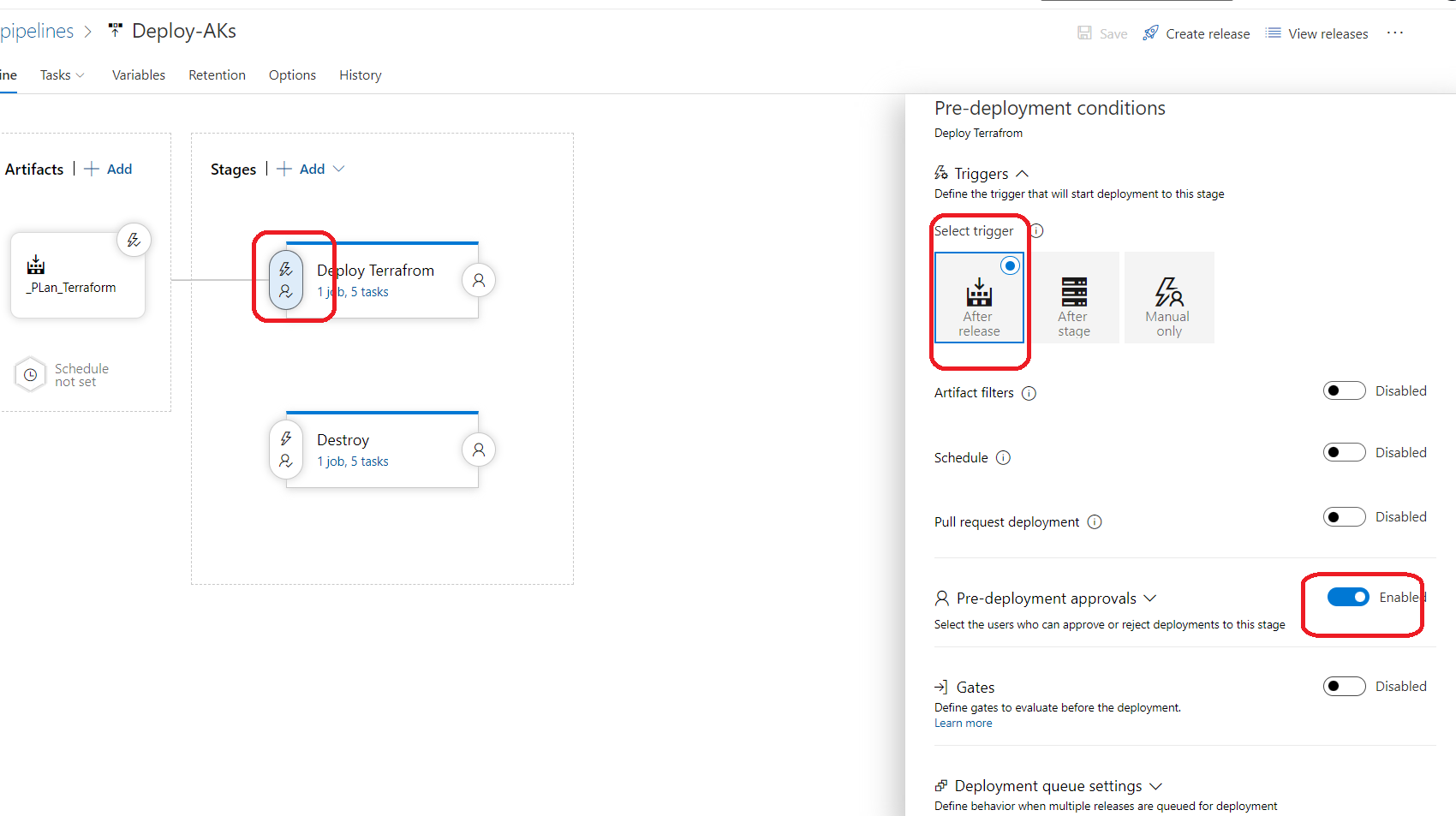

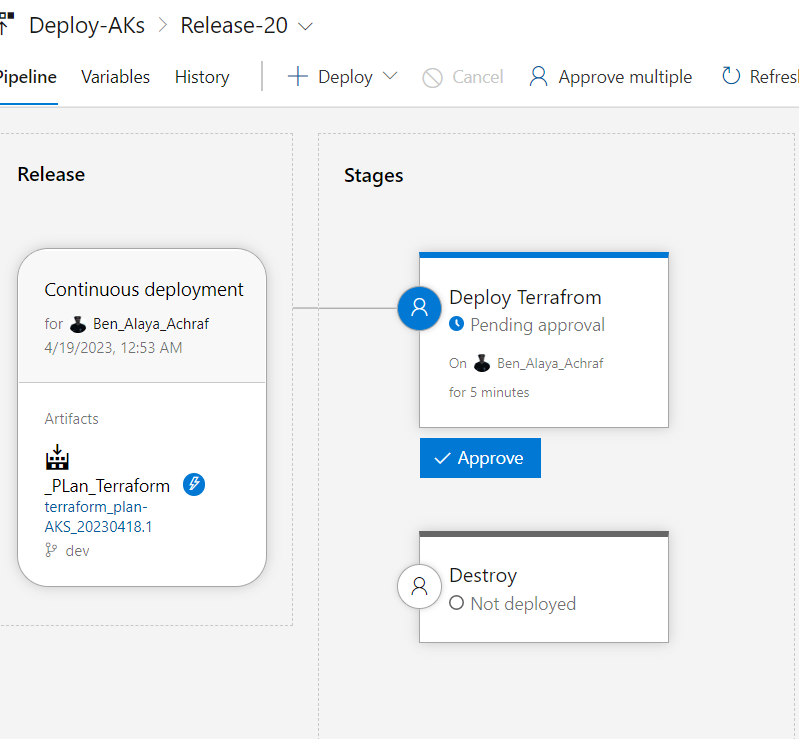

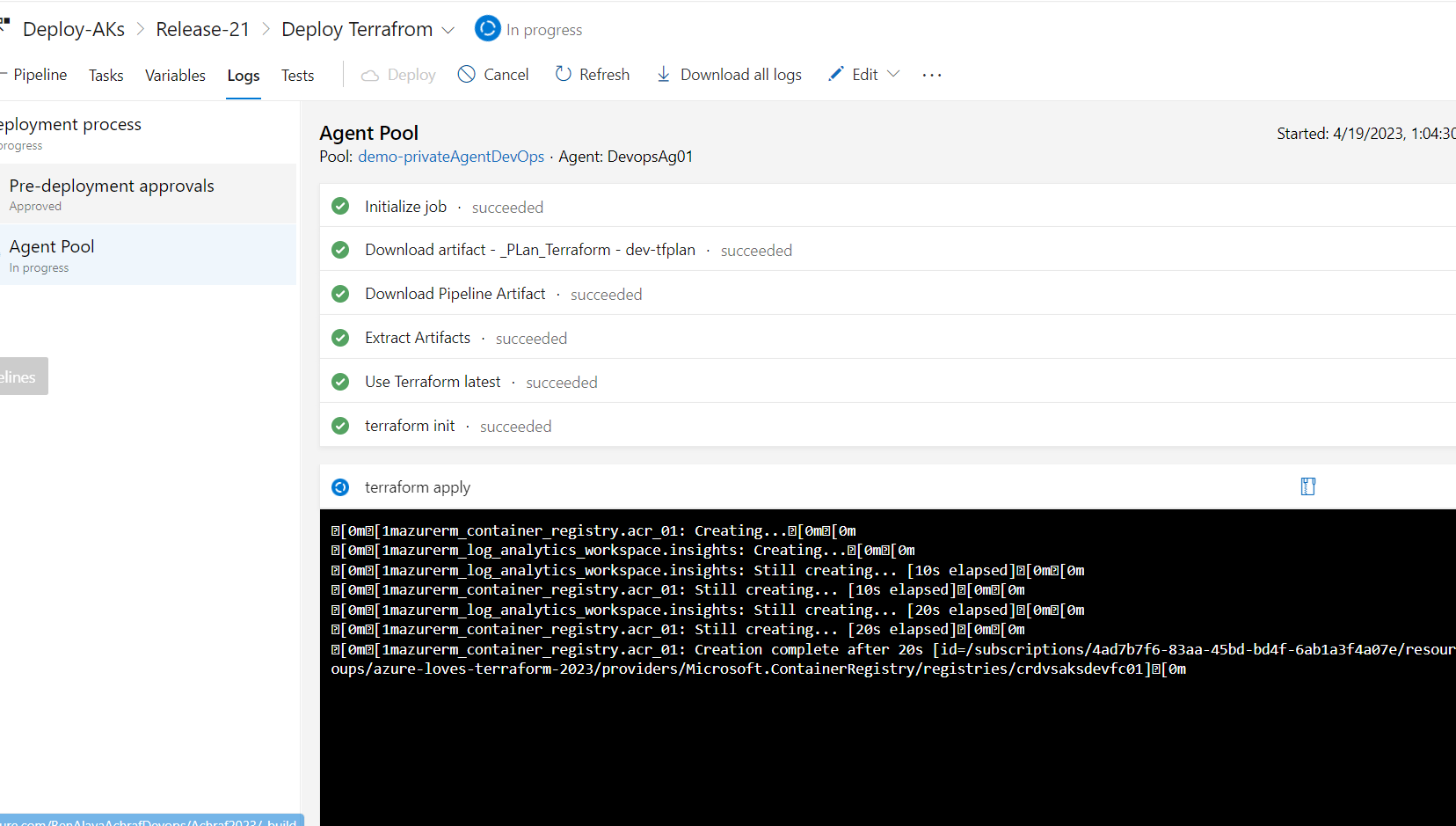

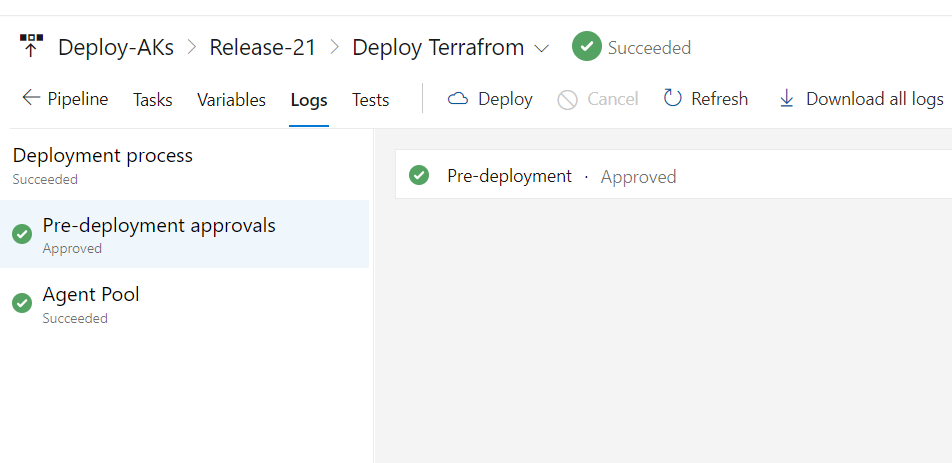

In this first part we define our CI pipeline. Its main role is to build our Terraform configuration: it validates the code and creates a tfplan so that we can see what we are going to build.

By validating our configuration, we run checks that verify whether it is syntactically valid and internally consistent, regardless of any provided variables or existing state. After that, we use the terraform plan command, which creates an execution plan that lets us preview the changes Terraform plans to make to our infrastructure.
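To make the idea of previewing changes more concrete, here is a minimal Python sketch of how a plan exported as JSON (for example with terraform show -json) could be summarized. The sample data, the resource names and the helper name `summarize_plan` are assumptions made for illustration only; the sketch relies solely on the `resource_changes`, `change.actions` and `change.after` fields of Terraform's JSON plan output.

```python
import json

# Hypothetical, heavily trimmed sample of a plan exported as JSON.
# Real plans contain many more fields; the resource names are made up.
SAMPLE_PLAN = """
{
  "resource_changes": [
    {"address": "azurerm_resource_group.rg",
     "type": "azurerm_resource_group",
     "change": {"actions": ["create"], "after": {"name": "demo-rg"}}},
    {"address": "azurerm_kubernetes_cluster.aks",
     "type": "azurerm_kubernetes_cluster",
     "change": {"actions": ["delete", "create"], "after": {"name": "demo-aks"}}},
    {"address": "azurerm_storage_account.st",
     "type": "azurerm_storage_account",
     "change": {"actions": ["no-op"], "after": {"name": "demost"}}}
  ]
}
"""


def summarize_plan(plan):
    """Return one entry per resource that the plan would actually change."""
    summary = []
    for resource in plan.get("resource_changes", []):
        change = resource.get("change", {})
        actions = change.get("actions", [])
        # Resources marked "no-op" are unchanged, so they are skipped.
        if actions == ["no-op"]:
            continue
        # "after" is null when a resource is only being deleted.
        after = change.get("after") or {}
        summary.append({
            "address": resource.get("address"),
            "type": resource.get("type"),
            "name": after.get("name"),
            "actions": actions,
        })
    return summary


if __name__ == "__main__":
    for item in summarize_plan(json.loads(SAMPLE_PLAN)):
        print(item["address"], "/".join(item["actions"]))
```

A summary like this could be printed in the build log or attached to a pull request, so reviewers see only the resources that will be created, updated or destroyed.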

In more advanced scenarios, which we may cover in future articles, we will add tools such as Checkov to scan our infrastructure configuration for misconfigurations before they are deployed, as well as tfsec, a static analysis security scanner for Terraform code.

Defining our Pipeline:

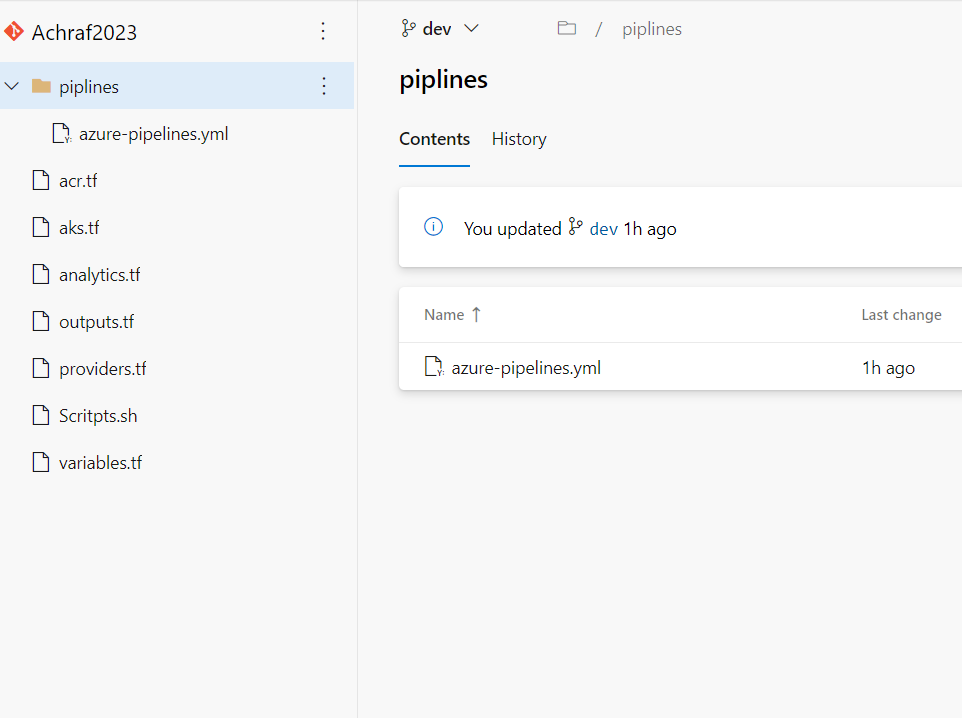

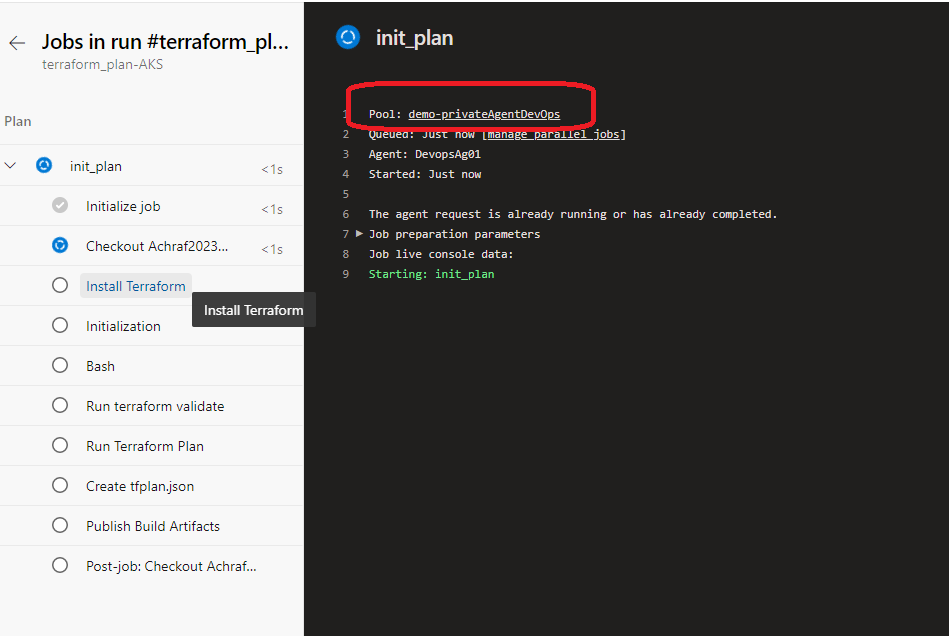

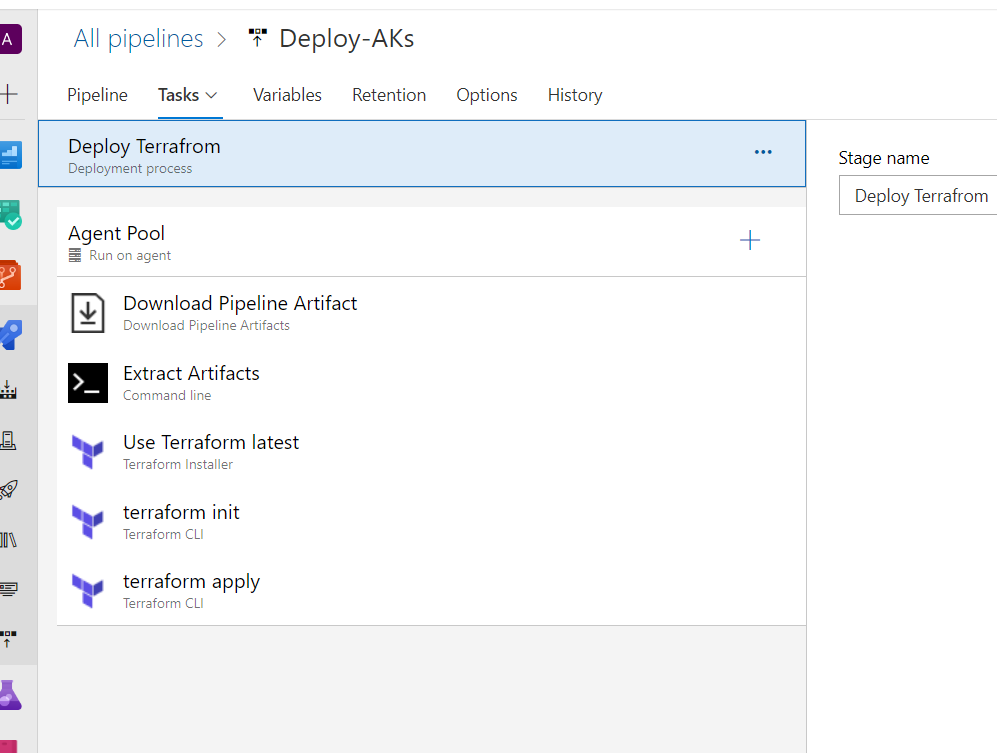

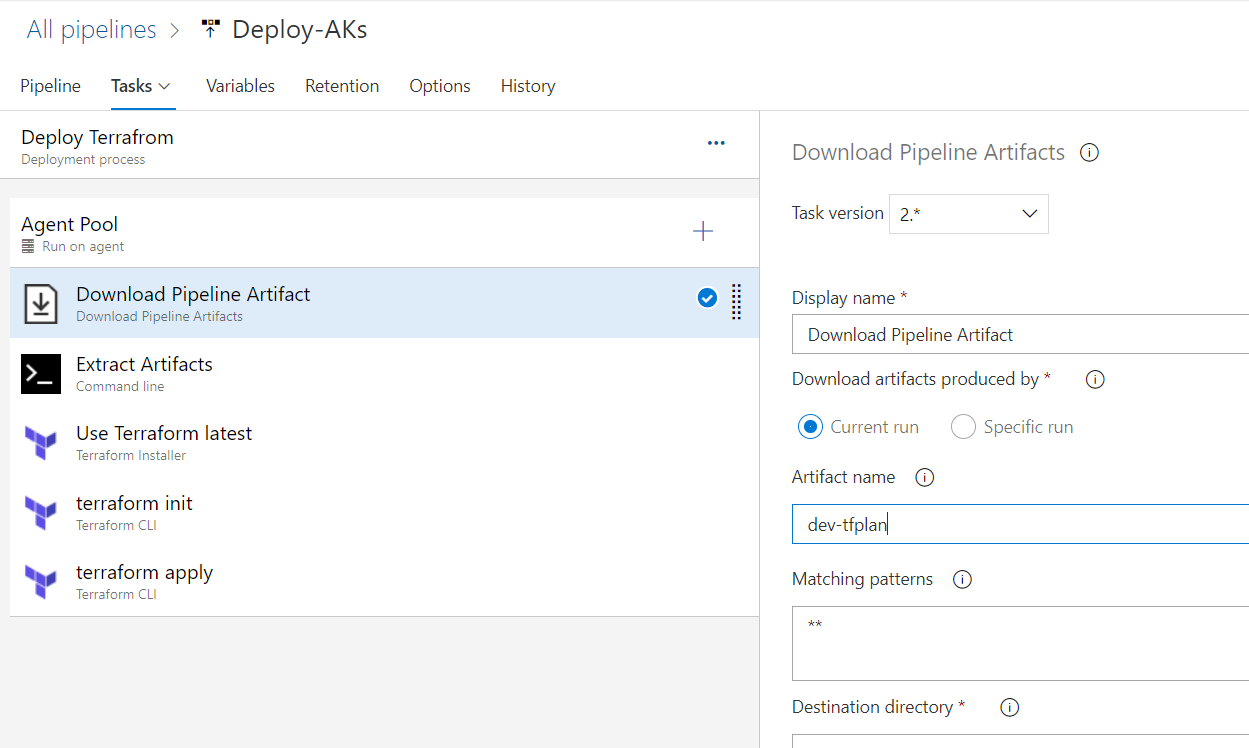

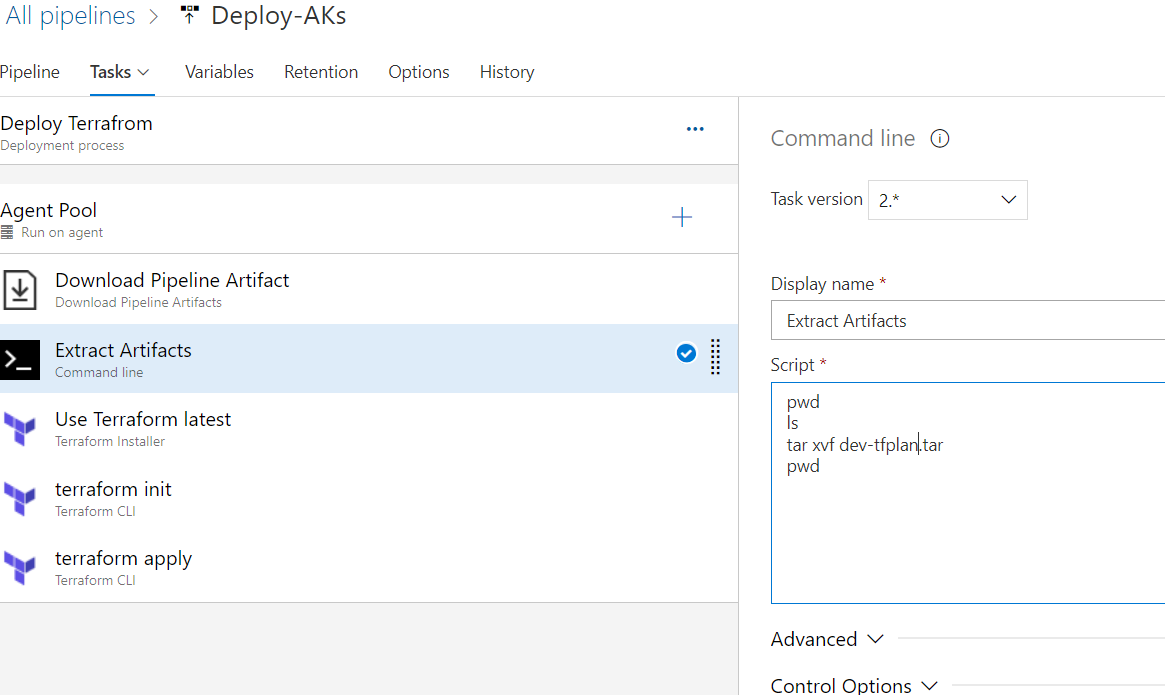

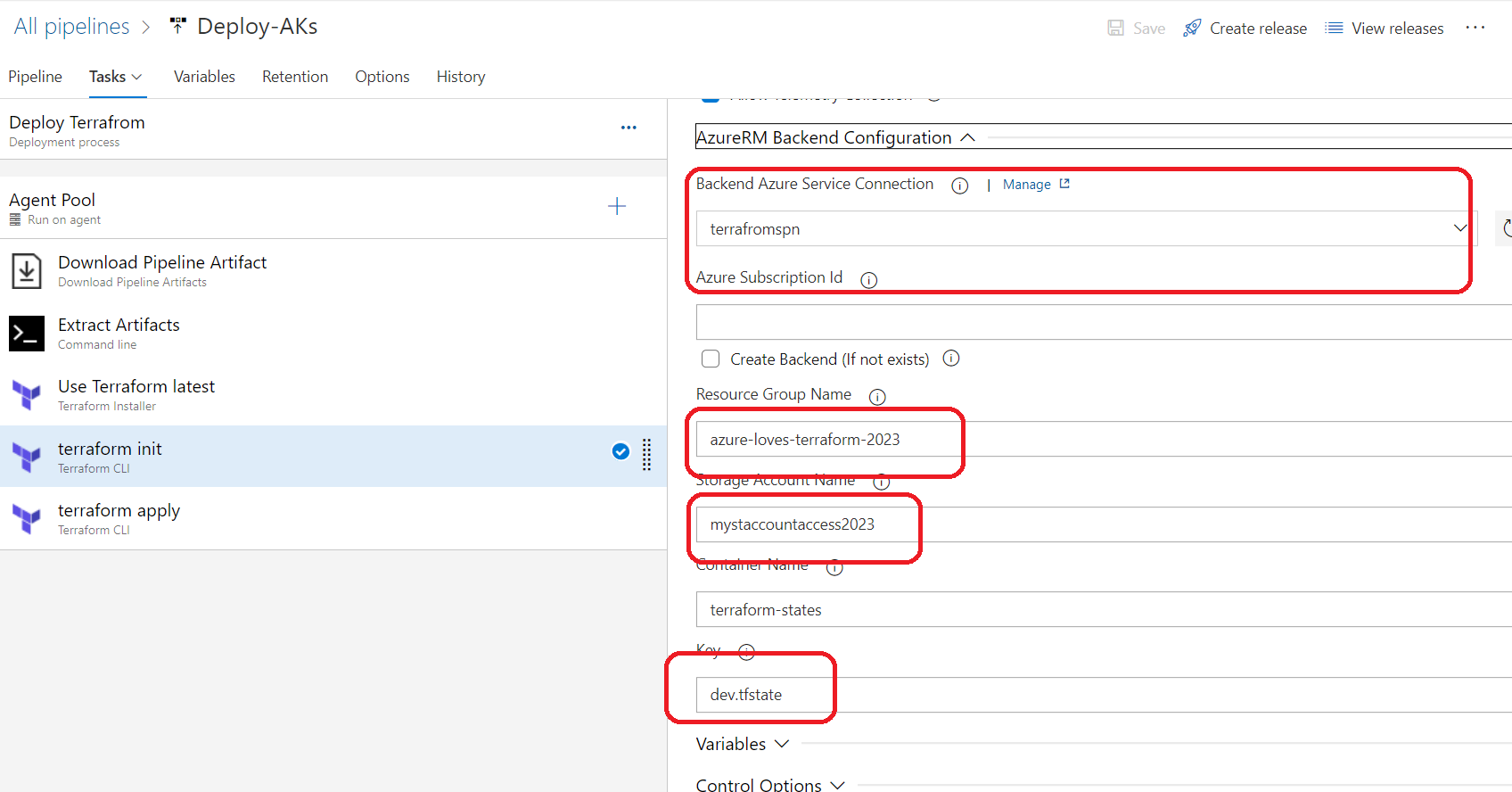

```yaml
name: $(BuildDefinitionName)_$(date:yyyyMMdd)$(rev:.r)

trigger:
  branches:
    include:
      - dev

# Defining our agent pool and the private agent that we created.
pool:
  name: demo-privateAgentDevOps
  demands:
    - Agent.Name -equals DevopsAg01

stages:
  - stage: terraform_plan
    displayName: Plan
    jobs:
      - job: init_plan
        steps:
          - checkout: self

          - task: charleszipp.azure-pipelines-tasks-terraform.azure-pipelines-tasks-terraform-installer.TerraformInstaller@0
            displayName: 'Install Terraform'
            inputs:
              terraformVersion: 'latest'

          - task: TerraformCLI@0
            displayName: 'Initialization'
            inputs:
              command: 'init'
              workingDirectory: '$(System.DefaultWorkingDirectory)/'
              backendType: 'azurerm'
              backendServiceArm: 'terrafromspn'
              backendAzureRmResourceGroupName: 'azure-loves-terraform-2023'
              backendAzureRmResourceGroupLocation: 'francecentral'
              backendAzureRmStorageAccountName: 'mystaccountaccess2023'
              backendAzureRmContainerName: 'terraform-states'
              backendAzureRmKey: dev.tfstate
              allowTelemetryCollection: true

          # Validate our configuration
          - task: TerraformCLI@0
            displayName: 'Run terraform validate'
            inputs:
              command: 'validate'
              workingDirectory: '$(System.DefaultWorkingDirectory)'
              commandOptions:
              allowTelemetryCollection: true
              environmentServiceName: 'terrafromspn'
              backendType: azurerm

          # Create an execution plan
          - task: TerraformCLI@0
            displayName: 'Run Terraform Plan'
            inputs:
              backendType: azurerm
              command: 'plan'
              commandOptions: '-input=false -out .tfplan'
              workingDirectory: '$(System.DefaultWorkingDirectory)/'
              environmentServiceName: 'terrafromspn'
              publishPlanResults: 'dev-plan'

          - script: |
              cd $(Build.SourcesDirectory)/
              terraform show -json .tfplan >> tfplan.json
              # Format the tfplan.json file
              terraform show -json .tfplan | jq '.' > tfplan.json
              # Show only the changes
              cat tfplan.json | jq '[.resource_changes[] | {type: .type, name: .change.after.name, actions: .change.actions[]}]'
            displayName: Create tfplan.json

          - task: PublishBuildArtifacts@1
            displayName: 'Publish Build Artifacts'
            inputs:
              PathtoPublish: './'
              ArtifactName: 'dev-tfplan'
              publishLocation: 'Container'
              StoreAsTar: true
```

As you can see, our pipeline displays and exposes a lot of information. This is only for demo purposes; in a real setup we should put all of these values inside a Library and read them from there.

aks.tf
